Task-based parallelism is one tool to write such new, highly concurrent software. It phrases programs as a sequence of steps including their causal dependencies, but leaves the decision of what (i.e. which task) to execute, when, and where to a task runtime. Task-based codes thus promise to be performance-portable, as a different runtime on a different machine might pick a different schedule.

Introducing tasks (taskification), however, is not trivial. It is often done in a trial-and-error fashion and often results in big performance disappointments. We propose to abandon the predominant trial-and-error approach and advocate a data-driven taskification, where:

- Users annotate their codes with information like “this could be a task” or “this could be a parallel loop”.

- Users execute this annotated code to obtain a performance footprint.

- Users pipe the outcome into a tool (a simulator) to visualise and analyse the resulting task graph and obtain some recipes on how to translate their potential task structure into a taskified code.
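To make the last step more concrete, the following is a minimal sketch of what simulating a recorded task graph on a simple machine model could look like. It is not the API of any existing tool: the function `simulate`, the `tasks` dictionary layout and the example graph are hypothetical, and the machine model is assumed to be a set of identical workers with zero scheduling overhead and a greedy first-come-first-served scheduler.

```python
import heapq


def simulate(tasks, workers):
    """Greedily schedule a task graph on `workers` identical cores.

    `tasks` maps a task name to (duration, [names of predecessor tasks]).
    The graph is assumed to be acyclic and `workers` to be at least 1.
    Returns (work, span, makespan).
    """
    # Work: total compute time, i.e. the runtime on a single worker.
    work = sum(duration for duration, _ in tasks.values())

    # Count unfinished predecessors and record the successors of each task.
    pending = {name: len(deps) for name, (_, deps) in tasks.items()}
    successors = {name: [] for name in tasks}
    for name, (_, deps) in tasks.items():
        for dep in deps:
            successors[dep].append(name)

    # Span: length of the critical path through the dependency graph.
    finish = {}

    def earliest_finish(name):
        if name not in finish:
            duration, deps = tasks[name]
            finish[name] = duration + max(
                (earliest_finish(dep) for dep in deps), default=0.0
            )
        return finish[name]

    span = max(earliest_finish(name) for name in tasks)

    # Event-driven simulation: start ready tasks while workers are idle,
    # then advance time to the next task completion.
    ready = sorted(name for name, count in pending.items() if count == 0)
    running = []  # min-heap of (finish_time, task_name)
    now, idle = 0.0, workers
    while ready or running:
        while ready and idle > 0:
            name = ready.pop(0)
            heapq.heappush(running, (now + tasks[name][0], name))
            idle -= 1
        now, done = heapq.heappop(running)
        idle += 1
        for succ in successors[done]:
            pending[succ] -= 1
            if pending[succ] == 0:
                ready.append(succ)
    return work, span, now


# Hypothetical footprint: a small diamond-shaped task graph.
tasks = {
    "load": (1.0, []),
    "left": (4.0, ["load"]),
    "right": (2.0, ["load"]),
    "reduce": (1.0, ["left", "right"]),
}
print(simulate(tasks, 1))  # (8.0, 6.0, 8.0)
print(simulate(tasks, 2))  # (8.0, 6.0, 6.0)
```

In this toy graph the critical path (6.0) already limits the runtime on two workers, so the best possible speedup is 8/6 regardless of core count. This is the kind of insight one would like to have before investing in a rewrite.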

To realise this research vision, we have to

- establish a task training landscape

- develop a data-driven taskification toolset and workflow

- investigate task features that are missing within mainstream tasking approaches

Research background

We identify four major challenges around tasking:

- Phrasing and rewriting codes in a task language is not trivial. The traditional HPC education and HPC tool ecosystem (performance analysis tools) are historically biased towards data decomposition strategies, while tasking advocates primarily produce tutorial-style material on tasking. We will work towards a training and experience exchange ecosystem. It will be founded on workshops that host tutorials from different vendors and research groups, bring in performance analysis tools and their developers, and provide a platform for users to report on their experience, highlighting what works and what does not. Alongside this training, we aim to tackle the problem that the potential pay-off of tasking is often not evident from the outset.

- Task-based codes do not necessarily yield fast or performance-portable codes. Tasking induces an overhead, which can be significant. It is not trivial to predict how tasking runtimes will or should perform, and any performance gain could completely change with the next machine or runtime/compiler generation. Balancing loop concurrency and task concurrency can be a delicate challenge. External factors make bespoke taskification tricky: library dependencies (does a library in use support the tasking model of choice, is it MPI thread-safe, …?), the tuning needs of magic tasking hyperparameters (e.g. what is the right task granularity or prioritisation?), and uncertainty around future architectures. We will work towards a data-driven taskification workflow where users can estimate the pay-off of tasks before they invest in tasking. We will have an API to model potential task structures and to predict their efficiency for simple machine models. This establishes a workflow where performance engineering and design precede code development. Abandoning the idea of a posteriori code tuning and making efficiency a first principle of writing code are part of the required paradigm shift for exascale software development.

- The task paradigm is not a direct fit for the workhorses behind exascale. GPGPUs will facilitate the transition into the exascale era. Heterogeneous architectures will dominate (the first) exascale machine generations. The heterogeneity of computers will likely continue to increase. Even though accelerators become more flexible with every generation and can process different tasks (kernels) in parallel, they perform best for classic BSP-style data parallelism with homogeneous data access patterns. Developers often have to compromise carefully between the algorithmic flexibility of tasking and the most efficient code design. Beyond the question of whether code fits the metal, most tasking paradigms furthermore inherently assume that data is “globally” available. This gives the scheduler the freedom to decide where to run a computation. However, we know that data movements will be expensive on exascale machines. We propose to research solutions to the shortcomings of present-day tasking approaches. Particular emphasis will be put on how fine-grain tasking integrates into distributed/NUMA environments and GPUs. Our groups have successfully prototyped first ideas of what such solutions could look like, and we plan to continue and generalise this work.

- Users do not want to rewrite their codes. To make matters worse, the community still lacks a single tasking standard despite various efforts on the language (SYCL, OpenMP) and API side (Kokkos). Teams are thus hesitant to commit fully to one particular technology. The variety of tasking approaches and tasking runtimes is advantageous, as it fosters evolution through competition and different strengths suited to different applications remain on the table. It is problematic for teams predominantly committed to domain-scientific output, as there is no additional credit from their peers for yet another rewrite. On top of this task-intrinsic challenge, we hypothesise that MPI, typically used for data decomposition on a large scale, is here to stay. Despite progress on the PGAS, language and virtualisation side, we foresee that MPI will remain the primary technology behind the majority of exascale codes. All of our activities will allow users to pick a tasking approach of their choice and stay committed to MPI (as well as to the GPU approach of choice).
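The second challenge above names task granularity as one of the hyperparameters that is hard to tune. A toy cost model illustrates why. It is a sketch under strong assumptions rather than a validated performance model: all tasks are independent and equally sized, every task pays the same fixed runtime overhead, and the workers are identical. The function name and its parameters are hypothetical.

```python
import math


def predicted_speedup(total_work, num_tasks, overhead_per_task, workers):
    """Estimate the speedup over serial execution for a given granularity.

    Assumes independent, equally sized tasks, a constant per-task
    overhead and identical workers that process the tasks in rounds.
    """
    task_cost = total_work / num_tasks + overhead_per_task
    rounds = math.ceil(num_tasks / workers)
    return total_work / (rounds * task_cost)


# Sweep the granularity for an assumed workload: 100 time units of
# compute, 1 time unit of overhead per task, 4 workers.
for num_tasks in (1, 4, 16, 100, 1000):
    speedup = predicted_speedup(100.0, num_tasks, 1.0, 4)
    print(f"{num_tasks:5d} tasks -> predicted speedup {speedup:.2f}")
```

Too few tasks leave workers idle, while too many tasks let the overhead dominate. Where the sweet spot lies depends on the overhead, which differs between runtimes and machines, so a choice tuned for one system need not carry over to the next.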

Objectives

We believe that a concerted effort is required to tackle the risks mentioned above and to ensure that our existing and upcoming software landscapes harvest the potential promised by the taskification paradigm:

- Establish taskification training and an experience exchange landscape. We propose to run a series of workshops that demonstrate, discuss, and systematically document how to translate implementations into task-based code, how to balance domain (data) and task (functional) decomposition, which technologies are on the table, and which classic pitfalls and problems the community will want to avoid in the future. The training and reports will help other ExCALIBUR consortia and, more widely, other groups in the UK to integrate tasking into their codes, either incrementally or as a first principle. We have successfully organised training around performance analysis tools before.

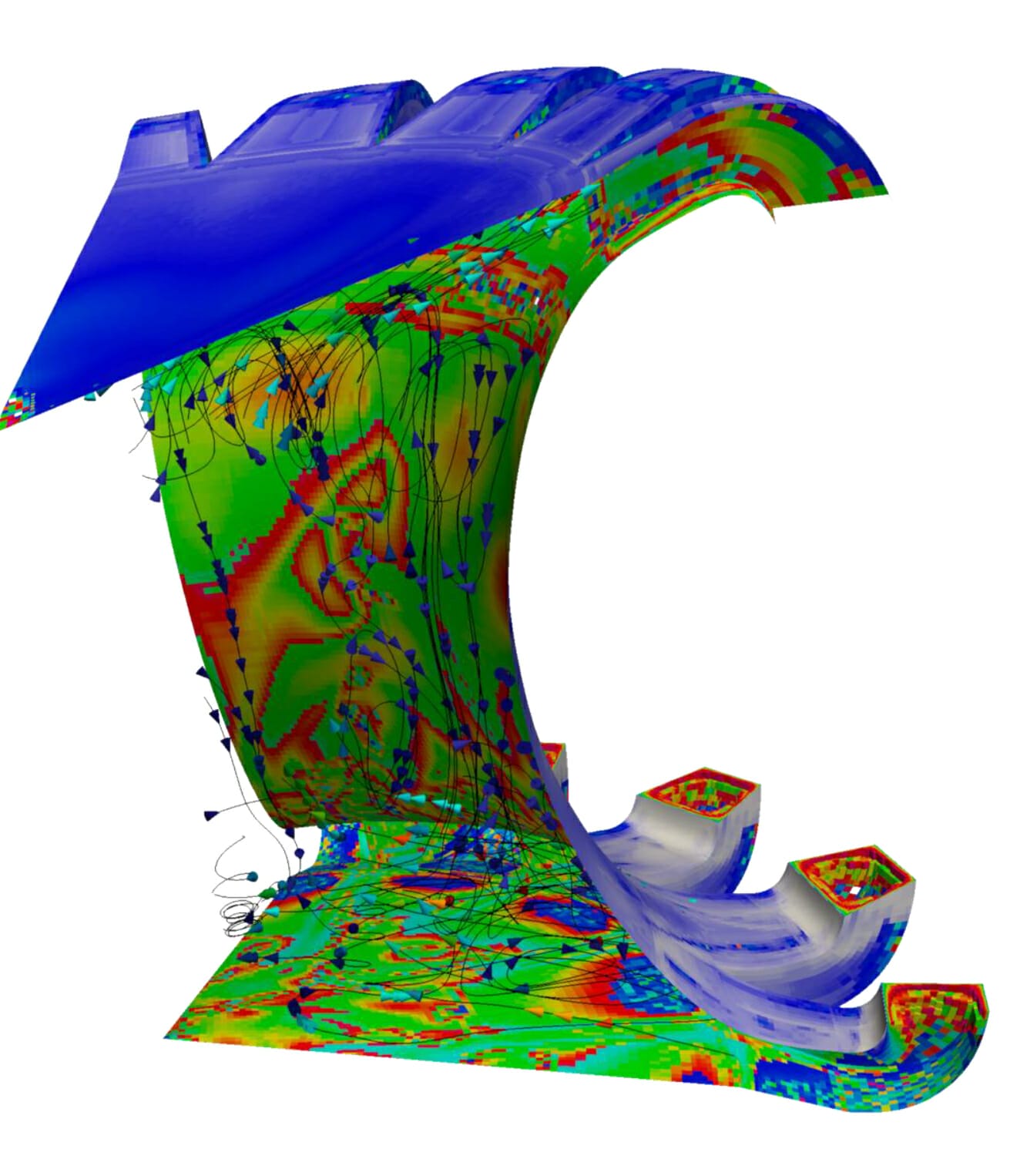

- Data-driven taskification. We plan to advocate for and invest in the use of tools that allow developers to log and characterise their code’s runtime behaviour and dependencies before they commit to tasking or classic parallelisation. With a compute fingerprint, we can (i) make informed, i.e. data-driven, decisions on where and where not to use task-based parallelism, (ii) guide and instruct tasking runtimes how to map task codes into fast code, and (iii) explore/simulate upcoming hardware solutions w.r.t. their potential for taskified codes. Item (i) makes the introduction of tasks more manageable, as pros and cons are easier to assess; items (ii) and (iii) assess the task overhead/pay-off balance and formalise to which degree tasking will or does pay off. Our work, realised in the form of a new analysis tool called Otter (available here), helps to move away from trial-and-error taskification and instead make informed design decisions based on performance models.

- Uncover and develop features that off-the-shelf tasking approaches lack, with the aim of integrating them into (existing) MPI/GPU parallelisation. Our consortium has identified three key features that current mainstream tasking systems lack when integrating tasking into existing many-node (MPI) or heterogeneous (GPU) codes. We have prototyped ways to realise these missing features. We plan to generalise these ideas and cast them into proper standalone libraries such that they can be used within existing MPI/GPU environments with the user’s tasking system of choice. Our goal is to make the task paradigm more powerful w.r.t. distributed and heterogeneous architectures where system configurations or characteristics change at runtime (due to I/O or changing energy envelopes, for example) or components fail.

Team

Tobias Weinzierl

Tobias Weinzierl is a full professor in Computer Science at Durham University. He graduated from Technische Universität München (TUM) in Germany, and completed his habilitation at TUM as well.

Tobias’ research orbits around fundamental algorithmic and implementation challenges that are omnipresent in scientific computing: data movement optimisations, the economic usage of bandwidth, memory and compute facilities, the clever orchestration of tasks, the exploitation of abstract models feeding into calculations, … Tobias applies his ideas primarily to multigrid methods, hyperbolic equation system solvers and particle(-in-a-grid) methods. While his work focuses on fundamental algorithms combining high-performance scientific computing and state-of-the-art mathematics, he always asks how to translate these into working, good code. Tobias’ research influences several open source projects which in turn are used in computational physics, earth sciences and engineering.

Marion Weinzierl

Marion is a Research Software Engineer in the Advanced Research Computing unit at Durham University and the RSE Team Leader of the N8 Centre of Excellence for Computationally Intensive Research (CIR), as well as a trustee of the Society of Research Software Engineering. She has a degree in Media Informatics and a PhD in Scientific Computing. Previously she worked both in academia and in industry in computational physics (in various forms).

Adam Tuft

Adam is a part-time Research Assistant in the Department of Computer Science at Durham University and is also completing his MSc in Scientific Computing and Data Analysis (MISCADA) at Durham. He recently submitted his dissertation on tracing and visualising parallel-for and task-based OpenMP programs. He previously spent 3.5 years working in Financial Services after receiving his BSc in Physics and Astrophysics from the University of Sheffield.

Partners and friends

Durham’s Computer Science department, Durham’s Advanced Research Computing, Durham’s Physics department, Hartree

How to get involved

The task team is always open to collaborations, both within ExCALIBUR and with the wider international community. Most of our work is discussed internally on Slack. Please contact the PI or our Knowledge Exchange Coordinator via email to be added to our Slack.