Next Generation Observation Processing System

To accurately predict future weather, it is first necessary to properly initialise the numerical weather prediction model. The best estimate of the current atmospheric state (known as the analysis) is constructed using a vast network of observations of many different types. Examples are satellite radiances, measurements from commercial aircraft, surface instruments, radar measurements, and radiosonde profiles of temperature. These observations are combined with a previous short-range weather prediction through a process called data assimilation to produce the analysis. The quality of the analysis is influenced by many factors, one of which is the quality of the observations used in the process.

The UK’s current observation processing system is based on strong science. However, its software implementation is now showing its age and, in particular, it is not expected to perform well on future supercomputers.

This is where our next generation Observation Processing system comes into play. By adopting the JEDI code framework (Joint Effort for Data assimilation Integration), developed by the US Joint Center for Satellite Data Assimilation, we have been able to develop a modern JEDI-based Observation Processing system (known as JOPA). JOPA is based on modern programming principles and provides improved modularity and flexibility.

This new software infrastructure will facilitate future scientific improvements to produce a more accurate analysis and to improve observation usage (for example, by potentially allowing us in the future to harvest serendipitous observations from the Internet of Things). It will help put the UK in an ideal place to exploit the opportunities that upcoming changes in the supercomputer landscape will offer to improve the quality of our weather and climate predictions.

Author and Lead: David Simonin

Edited by Nigel Wood

Marine Systems (WAVEWATCH III) design

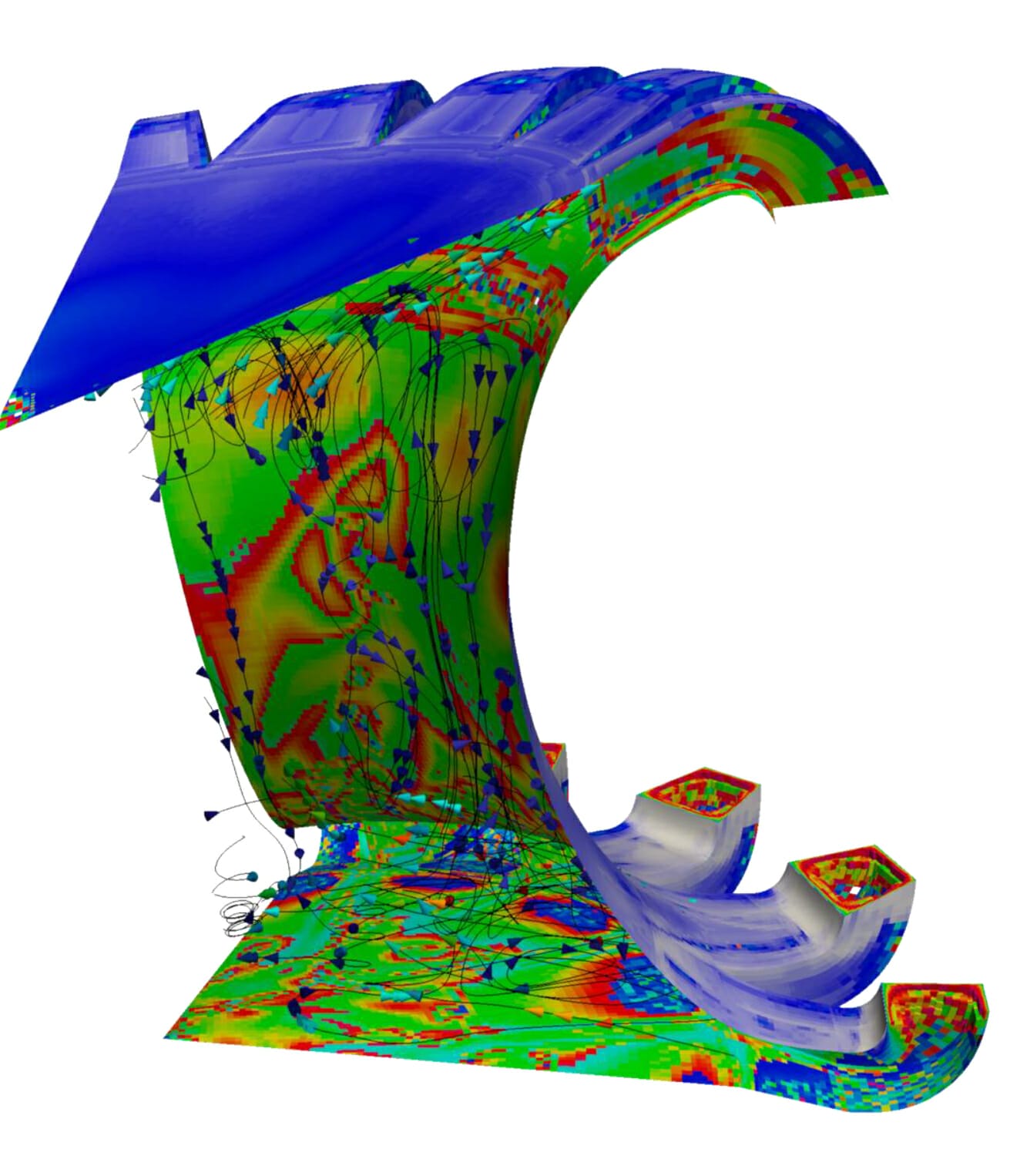

Prediction of ocean waves is essential to planning safe marine operations and issuing warnings of coastal flood hazards. The Met Office, and numerous other operational centres worldwide, generate wave forecasts using the WAVEWATCH III third generation wave model, which is a community model co-developed by an international group of wave modelling scientists. WAVEWATCH III already runs on Central Processing Unit (CPU) based computers, using Message Passing Interface (MPI) and Open Multi-Processing (OpenMP) parallelisation. This activity will research and develop modifications to the WAVEWATCH III wave model that will provide a significant extension to its flexibility and capability by enabling it to efficiently exploit shared memory architectures, focusing on adaptation of the model to run on Graphics Processing Units (GPUs). The activity will also deliver a plan for how the model code, following Separation of Concerns principles, could be refactored to enable (semi-automated) adaptation and compilation of the code across the range of architectures we might expect on the next generation of high-performance computers.

Author and Lead: Andy Saulter

Verification system components design, integration and testing

As part of the Next Generation Modelling Systems (NGMS) programme at the Met Office, the Next Generation Verification (NG-Ver) project team are replacing their current operational verification software (VER) and adopting a new, multi-faceted verification toolkit, Model Evaluation Tools (MET). MET’s primary attraction is its ability to verify LFRic’s cubed sphere grid. Within MET’s umbrella of software tools, METplus wrappers have been purpose built to improve MET’s usability and promote collaboration between colleagues through the tool’s highly configurable and modular nature. The ExCALIBUR project team have been tasked with determining the best practice for integrating METplus within the Met Office and with helping to enhance the capabilities of VerPy, the Met Office’s verification visualisation software, to manipulate and visualise METplus output.

The project kicked off in 2020 with an investigation of the METplus wrappers’ capabilities, reporting on the best path for integrating the toolset with the Met Office’s current systems. A written report and presentation were provided to NG-Ver and the wider Met Office community whose work will be impacted by the change. With a best path forward determined through consultation with multiple teams across the office, the project has moved on to determining how to run METplus tools in an optimal fashion within a Rose/Cylc suite (the Met Office’s workflow scheduler software). The main priorities throughout the project have been maintaining project transparency, which is seen as a key component of change management, and improving suite design, focussing on usability (through use of metadata, video tutorials and written user guides), performance (with smart data searches, task parallelisation and comparison testing with VER output) and functionality (selecting, testing, and integrating the most relevant tools within the METplus toolset). The project’s main objective on suite design is on track for delivery in March 2022, with support for the VerPy software ongoing until September 2022. Collectively, these objectives will provide a high-quality set of verification tools for the science community at the Met Office as we move towards the Next Generation of Modelling Systems.

Lead: Philip Gill

Author: Joseph Abram

Data assimilation algorithm research

The skill of weather forecasts from a numerical weather prediction model is highly dependent on the accuracy of the initial conditions: the better the knowledge of key atmospheric and surface fields (e.g., winds, temperature, pressure, cloud cover) at the start of the forecast, the more accurately the evolution of these fields can be predicted over a period of a few days. An effective strategy to estimate reliable initial conditions is to combine the information provided by short-range forecasts and by available observations, using a process known as data assimilation. Operational meteorological centres such as the Met Office assimilate millions of observations taken all over the world using sophisticated numerical algorithms that require the use of world-class supercomputers. In this activity we aim to develop efficient and trusted methods to estimate the uncertainty of model forecasts, which is then combined with the uncertainty associated with observations. Such methods aim to approximate the statistical distribution of forecast errors using a finite sample of short-range forecasts, known as a forecast “ensemble”. We will also implement a numerical model, known as an “adjoint” model, to back-propagate the information from observations at the time they were made to the initial time of the forecast, so that subsequent forecasts are iteratively more accurate. Finally, this activity will extend the capability of the Joint Effort for Data assimilation Integration (JEDI) framework used to develop the Met Office’s next-generation data assimilation system. In particular, we will implement a method that can more effectively assimilate complex observations, such as those that require nonlinear operators to simulate them from short-range forecasts.
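As a minimal illustration of the ensemble idea described above (not the Met Office or JEDI implementation), the following sketch estimates the forecast-error variance of a single scalar quantity from a small ensemble and combines the ensemble mean with one observation, weighting each by its uncertainty. The function name, variable names and numbers are all hypothetical.

```python
from statistics import mean, variance


def scalar_analysis(ensemble, observation, obs_error_variance):
    """Combine an ensemble of scalar forecasts with one observation.

    Returns (analysis, gain, analysis_error_variance).
    """
    # Background (prior) estimate: the ensemble mean.
    background = mean(ensemble)
    # Forecast-error variance approximated by the sample variance of the ensemble.
    background_variance = variance(ensemble)
    # Gain: the weight given to the observation relative to the forecast.
    gain = background_variance / (background_variance + obs_error_variance)
    # Analysis: the background corrected towards the observation.
    analysis = background + gain * (observation - background)
    analysis_variance = (1.0 - gain) * background_variance
    return analysis, gain, analysis_variance


# Hypothetical temperature forecasts (kelvin) from a five-member ensemble.
ensemble = [281.0, 282.0, 283.0, 284.0, 285.0]
analysis, gain, analysis_variance = scalar_analysis(
    ensemble, observation=284.0, obs_error_variance=2.5
)
```

In this example the ensemble variance and the assumed observation error variance are equal, so the gain is 0.5 and the analysis lies halfway between the ensemble mean and the observation. Operational systems apply the same principle to very large state vectors with full error covariance matrices.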

Author and Lead: Stefano Migliorini

Coupling multiple components as a single executable

The NGMS coupling project [The Next Generation Modelling Systems Programme – Met Office] is building an atmosphere-ocean-ice-land climate model using the new Met Office atmosphere modelling system, LFRic.

The aim of this ExCALIBUR project is to refactor the top-level control program that launches execution of the different components of the Earth system on a supercomputer.

One technical difficulty of multi-component/multi-physics coupled software systems is that different modelling components (such as atmosphere or ocean) can have very different computing requirements. For instance, some processes may require much more memory than others to execute; some may use more threads per process. Typical supercomputer operating and runtime systems do not support these requirements well. We are exploring the limitations of, and potential improvements to, computer resource allocation within a climate model.

Lead and Author: Jean-Christophe Rioual

Framework for application input configuration and validation

Background

Next-generation weather prediction and climate projections rely on complex systems using a variety of code bases owned, developed, and maintained by different (but overlapping) communities. Each of these communities uses its own approach to developing and configuring its code and inputs, and its own coding standards. The correct configuration and development of an application and its inputs using any one of these code bases can rely on detailed domain-specific knowledge. Whilst experts are expected to have this knowledge for their domain, it’s difficult for developers and users of these systems to acquire this level of expertise across many applications.

Rules written by application specialists in the form of schemas (aka configuration metadata) can be used to ensure that user-defined inputs to applications are both appropriate and consistent. This includes (but is not limited to) what inputs are required, how these depend on the values of other inputs and that each input is of the correct data type and length. Such schemas can then be used to aid users not experienced in the use of these applications by constraining inputs and providing description, help, application default values, etc. Use of schemas in current systems is not universal and they are often written manually to be consistent with the code base, an approach which is hard to maintain and error prone.
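The kinds of checks listed above (required inputs, dependencies between inputs, data type and length) can be sketched with a small hand-rolled validator. This is an illustrative sketch only: the schema layout, input names and rules are hypothetical, and a real system would more likely rely on a standard such as JSON Schema with an established validator.

```python
# Hypothetical schema: the input names, types and rules are illustrative only.
SCHEMA = {
    "required": ["model", "timestep_seconds"],
    "properties": {
        "model": {"type": str},
        "timestep_seconds": {"type": int},
        "output_levels": {"type": list, "max_length": 3},
        "use_ensemble": {"type": bool},
        "ensemble_size": {"type": int},
    },
    # If the key is present and true, the listed inputs become required.
    "depends": {"use_ensemble": ["ensemble_size"]},
}


def validate(config, schema):
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    for name in schema["required"]:
        if name not in config:
            problems.append(f"missing required input '{name}'")
    for name, value in config.items():
        rule = schema["properties"].get(name)
        if rule is None:
            problems.append(f"unknown input '{name}'")
            continue
        # Exact type match, so that a bool is not accepted where an int is expected.
        if type(value) is not rule["type"]:
            problems.append(f"'{name}' should be of type {rule['type'].__name__}")
        elif "max_length" in rule and len(value) > rule["max_length"]:
            problems.append(f"'{name}' has more than {rule['max_length']} entries")
    for trigger, needed in schema["depends"].items():
        if config.get(trigger):
            for name in needed:
                if name not in config:
                    problems.append(f"'{name}' is required when '{trigger}' is set")
    return problems


good = {"model": "atmosphere", "timestep_seconds": 600, "output_levels": [1, 2]}
bad = {"model": "atmosphere", "timestep_seconds": "600", "use_ensemble": True}
good_problems = validate(good, SCHEMA)
bad_problems = validate(bad, SCHEMA)
```

Here `good_problems` is empty, while `bad_problems` reports the wrongly typed `timestep_seconds` and the missing `ensemble_size`. Returning every problem at once, rather than stopping at the first, is what lets an editor or user interface show all issues to an inexperienced user in one pass.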

One way to address this, which is being widely adopted by next-generation codes, is for the metadata contained in schemas to be automatically derived from single sources of truth, either using information held in the code bases to constrain the inputs or using separate schemas both to constrain the inputs and to generate the code used to read and validate them. Whilst we can influence the developers of the code bases that we use to adopt a single source of truth, we must accept that the way they wish to do this will differ between code bases. The challenge therefore is to be able to use the schemas generated from these different sources of truth as part of a common user experience.

Activity

This work is developing a common user experience for working with application configurations in YAML and compatible formats. An important feature is the usage of schemas, which are derived from single sources of truth, using a standard such as JSON Schema. We plan to apply schemas to validate application configurations, and to improve the user experience when editing, by:

- Presenting users with a common experience when they work with Cylc workflows, whose latest UI is a Jupyter Server application.

- Allowing users to spawn more powerful editors/development environments (e.g., Visual Studio Code) consistent with those used by the community for that code base.

- Where applicable, presenting users with a simplified graphical interface that will be similar for applications using different code bases.

Lead: Stephen Oxley

Authors: Stephen Oxley and Matt Shin

I/O Infrastructure investigations

Simulation systems that are targeting exascale supercomputers have ever more complex data requirements. The data serving these requirements need to come from somewhere, and relying on human intervention for all aspects brings both a danger of error and a significant overhead. Therefore, this activity will investigate how such a system can identify its own requirements, based on the science present in that system, in a flexible and maintainable way. Building on this, the information can then be used to configure initial conditions for a model component to meet those requirements, given input data from another model component (which may be running with different science that has different data requirements). The investigation will be carried out in the context of the Weather and Climate Prediction Use Case, but its results are expected to be generally applicable. It will examine previous work in the area, including how existing systems are designed and what requirements they are expected to meet, then propose a design in line with good software engineering principles (e.g., single source of truth), and prototype that solution with a view to providing a flexible self-configuring prognostic configuration system for the Met Office’s LFRic atmosphere model.
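The idea of a system identifying its own data requirements from the science it contains can be sketched as follows. This is a hypothetical illustration, not the LFRic design: the scheme names, field names and function names are all assumptions made for the example.

```python
# Hypothetical science schemes and the prognostic fields each one needs.
SCHEME_REQUIREMENTS = {
    "dynamics": {"wind_u", "wind_v", "potential_temperature", "density"},
    "cloud_microphysics": {"cloud_liquid", "cloud_ice", "potential_temperature"},
    "aerosol": {"dust", "sea_salt"},
}


def required_fields(active_schemes):
    """Union of the fields declared by every active scheme."""
    fields = set()
    for scheme in active_schemes:
        fields |= SCHEME_REQUIREMENTS[scheme]
    return fields


def plan_initial_conditions(active_schemes, available_fields):
    """Split the requirements into fields that can be read and fields still to be provided."""
    needed = required_fields(active_schemes)
    return {
        "read_from_input": sorted(needed & available_fields),
        "must_be_generated": sorted(needed - available_fields),
    }


# Input data from another (hypothetical) component that ran without ice microphysics.
available = {"wind_u", "wind_v", "potential_temperature", "density", "cloud_liquid"}
plan = plan_initial_conditions(["dynamics", "cloud_microphysics"], available)
```

Because each scheme declares its own needs in one place, switching a scheme on or off changes the derived requirements without anyone maintaining a separate list by hand. In this example `plan` shows that `cloud_ice` is the only field that the input data cannot supply.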

In addition to determining what data should be ingested into a modelling system, it is important to read and write that data in a scalable way. The second part of this activity will therefore review the performance of various input/output (I/O) technologies. The review will include both data-store aware technology and more conventional I/O libraries, including but not limited to the XML-IO-Server (XIOS), which is currently used by the Met Office’s LFRic model. The Met Office’s current global Numerical Weather Prediction (NWP) model will be used as a relevant case study; this will benchmark XIOS whilst running a similar output profile to the Met Office’s current deterministic global model configuration. This case study will also investigate the performance of offloading the creation and re-gridding of diagnostics onto the I/O system rather than performing them in the core model.

Lead and author: Stuart Whitehouse

Contact: Ryan Boult

Workflow design and analysis

This activity will investigate methods of analysing and optimising workflows for a system as a whole, aiming to avoid the situation in which improving the performance of one application has a detrimental effect on another, and to ensure better performance for an entire workflow. As a case study we will be working with the Met Office’s next generation global model, which is expected to be exascale-ready. As part of this process, we will also review changes to that workflow with a view to developing expertise in the wider community in developing efficient workflows in an exascale world. An appropriate choice of hardware for a particular workflow (or component of a workflow) depends on the answers to a variety of questions on resource usage, for example, wall-clock time, the number of nodes/cores, the peak memory required, disk storage space, and I/O bandwidth. Each of these has a cost, whether that is opportunity cost (the cost of using a resource for this workflow rather than another workflow), cost in electricity or CO2 emissions, or actual monetary cost (which is more explicit for cloud computing platforms such as Azure or Amazon Web Services). Making good choices here enables us to make better use of the hardware available at any given time. We will investigate ways of instrumenting workflows and reporting on these costs, and develop metrics to enable research software engineers to make sensible choices about optimisation and hardware.
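As a rough sketch of what instrumenting a workflow task might look like, the example below records wall-clock time and peak memory for a block of Python code and derives a simple node-seconds figure. The names `instrument` and `records` and the `nodes` argument are hypothetical, and `tracemalloc` only sees memory allocated by Python itself; a real workflow would more likely take these figures from the scheduler or system accounting.

```python
import time
import tracemalloc
from contextlib import contextmanager

# One dictionary per instrumented task.
records = []


@contextmanager
def instrument(task_name, nodes=1):
    """Record wall-clock time and peak Python memory for one task.

    Not designed to be nested: stopping tracemalloc in an inner block
    would also stop tracing for the outer one.
    """
    tracemalloc.start()
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        records.append({
            "task": task_name,
            "nodes": nodes,  # supplied by the caller, not measured
            "wall_clock_s": elapsed,
            "node_seconds": elapsed * nodes,
            "peak_memory_bytes": peak_bytes,
        })


# Stand-in for a real workflow task.
with instrument("regrid", nodes=4):
    data = [float(i) for i in range(100_000)]

total_node_seconds = sum(r["node_seconds"] for r in records)
```

Collecting the same fields for every task makes it possible to compare tasks on a common footing, for example by converting node-seconds into an energy or monetary cost using site-specific factors.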

Lead and author: Stuart Whitehouse

Contact: Ryan Boult

Containers

As workflows become more complicated, they introduce more complex dependencies. These may prove difficult to manage, and act as a barrier to portability of a workflow between different hardware. One way of managing the myriad libraries our software requires and improving portability is to use a container technology such as Docker or Singularity. This activity covers investigating the performance impact of running a distributed memory code in a container. The use of a container may have performance implications; in some cases (e.g., for small-scale code development) this might be acceptable, but for time-critical applications this could prove to be a significant issue. This activity will use the Weather and Climate Prediction Use Case, aiming to provide a container implementation to compile and run the Met Office’s next generation LFRic atmosphere model and then test its performance in a container in an HPC environment.

Lead and author: Stuart Whitehouse

Contact: Ryan Boult